Some weeks ago, Stefano Demiliani wrote an interesting post about “decoupling” (“Decoupling”: the misterious word for many Dynamics 365 Business Central partners. – Stefano Demiliani). He concluded:

And for you, Dynamics 365 Business Central PARTNERS: let’s not discredit the system by saying untrue things. There are no technological barriers today that could block a move to the cloud. At customers you must tell the truth and the inability of the individuals must not be masked as a limit in the platform, which has absolutely no limits in this sense today.

I completely agree. 👏

So I want to share with you my latest experience with “decoupling” strategies in a complex environment with real-time field equipment.

What is not possible to do in the Cloud…

The answer is simple: it’s not important for this discussion! What is and is not possible in the Cloud is merely a technical question. The only thing to know is that all the necessary tools are available to developers!

…and what is possible?

We have been talking about Artificial Intelligence for a year now; I think that is enough 😅 to explain the benefits of the Cloud today.

In the medium term, consumer hardware is unlikely to be able to run complex AI models, so once again: the future is the Cloud!

The challenges

Last year, during a training session at the Polytechnic of Turin, I gave some students two challenges about “decoupling”:

- The Cloud system has limitations in the data ingestion rate

- The Cloud system can be unavailable for maintenance at certain times

We talked about a generic Cloud ERP system in a 24/7 production environment.

The “generic” case was in fact a real one 😅: I was thinking of Business Central. At the time of that session, the operational limit of the Business Central Cloud APIs was 600 requests per minute per environment. Moreover, you must accept a six-hour update window for system maintenance.

The students did very well and answered the challenges with “queues” and “middleware”: the basic “bricks” of “decoupling”!

Queues

If the system cannot handle more than 600 requests per minute, you can enqueue the messages and transmit them in batches. You need an “enqueuer” for the input and a “dispatcher” for the output.

Here is a simple example with JSON messages, reducing the requests from three to one:
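To make the enqueuer/dispatcher idea concrete, here is a minimal Python sketch (not the code behind the original example). Messages are collected in a queue and sent as one JSON array per request, with requests spaced so that no more than 600 per minute leave the dispatcher. The `send` callable stands in for the real transport (for example an HTTP call to the ERP API); it, the batch size, and the `{"messages": [...]}` envelope are assumptions for illustration. Whether the target API accepts several records in one call, and how it counts them against its limits, must be checked in its documentation.

```python
import json
import time
from collections import deque
from typing import Callable


class Enqueuer:
    """Input side: collects incoming messages instead of forwarding them one by one."""

    def __init__(self) -> None:
        self.queue = deque()

    def enqueue(self, message: dict) -> None:
        self.queue.append(message)


class Dispatcher:
    """Output side: drains the queue in batches, one request per batch,
    spacing requests so the rate never exceeds max_requests_per_minute."""

    def __init__(self, queue: deque, send: Callable[[str], None],
                 batch_size: int = 100, max_requests_per_minute: int = 600) -> None:
        self.queue = queue
        self.send = send  # hypothetical transport, e.g. an HTTP POST to the ERP API
        self.batch_size = batch_size
        self.min_interval = 60.0 / max_requests_per_minute  # 0.1 s at 600/min
        self._last_sent = float("-inf")

    def dispatch(self) -> int:
        """Send everything currently queued and return the number of requests made."""
        requests = 0
        while self.queue:
            batch = [self.queue.popleft()
                     for _ in range(min(self.batch_size, len(self.queue)))]
            wait = self._last_sent + self.min_interval - time.monotonic()
            if wait > 0:
                time.sleep(wait)  # respect the minimum spacing between requests
            self.send(json.dumps({"messages": batch}))  # assumed envelope format
            self._last_sent = time.monotonic()
            requests += 1
        return requests


# Three messages, one request.
outgoing = []
enqueuer = Enqueuer()
for item, qty in (("A", 1), ("B", 2), ("C", 3)):
    enqueuer.enqueue({"item": item, "qty": qty})
print(Dispatcher(enqueuer.queue, outgoing.append).dispatch())  # 1
```

With a batch size of 100, 600 requests per minute can carry up to 60,000 messages per minute, which is the whole point of batching behind a rate limit.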

Middlewares

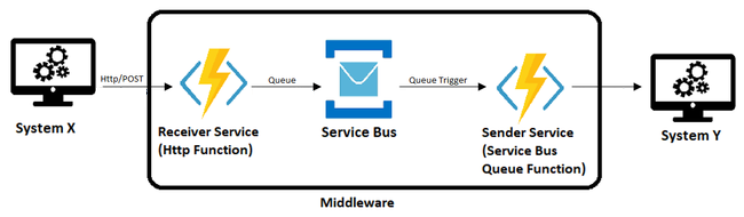

What is a “middleware”? Software that sits between two systems to integrate them! Here is an example using Microsoft tools:

The market is full of middleware from various vendors; search for “MuleSoft” or “Boomi” for other examples.

On Premises Tools

Yeah, now it’s time to talk about On Premises! Many “decoupling” systems are described as Cloud-to-Cloud integrations. That is normal for most operations, for example using Azure Functions to extend Business Central capabilities.

But in other scenarios you need an On Premises middleware that exchanges requests with the Cloud, either by polling it or by receiving calls from it. And this is my latest experience.
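A minimal sketch of the polling side of such an On Premises middleware, assuming a hypothetical `fetch` function that returns the pending requests and raises `ConnectionError` while the Cloud is unreachable (for example during the maintenance window). The interval and backoff values are arbitrary, and `sleep` is injectable only to make the loop testable.

```python
import time
from typing import Callable


def poll_cloud(fetch: Callable[[], list], handle: Callable[[dict], None],
               cycles: int, interval: float = 5.0, max_backoff: float = 300.0,
               sleep: Callable[[float], None] = time.sleep) -> int:
    """Poll the Cloud `cycles` times and return how many requests were handled.

    `fetch` is a placeholder (e.g. a GET on a Cloud endpoint) and must raise
    ConnectionError when the Cloud is unavailable."""
    delay = interval
    handled = 0
    for _ in range(cycles):
        try:
            requests = fetch()
        except ConnectionError:
            delay = min(delay * 2, max_backoff)  # back off exponentially during outages
        else:
            for request in requests:
                handle(request)
                handled += 1
            delay = interval  # back to the normal pace after a successful poll
        sleep(delay)
    return handled
```

The backoff keeps the middleware from hammering the Cloud during an outage, while the normal interval resumes as soon as a poll succeeds.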

Pharmaceutical distribution

I chose two topics from this industry:

- Requests coming from drug store software

- Interfaces with automatic warehouses

During peak hours, drug stores send thousands and thousands of requests to the distributor via Web Services. The typical requests are availability checks and orders. Even for a small distributor, the limit of 600 requests per minute mentioned above is too low.

Similarly, during peak hours, the ERP and the WMS exchange thousands and thousands of picking lines. The interfaces with automatic warehouses (KNAPP, for example) work in real time, using sockets. Yeah: TCP sockets!
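Because TCP delivers a stream of bytes rather than separate messages, a socket interface needs a framing rule so the receiver knows where each telegram ends. The Python sketch below assumes STX/ETX delimiters and a semicolon-separated picking line; both are hypothetical placeholders, since each automatic warehouse vendor defines its own telegram format.

```python
import socket

# Assumed framing: STX + ASCII payload + ETX. Real WMS interfaces define their own.
STX, ETX = b"\x02", b"\x03"


def send_telegram(sock: socket.socket, payload: str) -> None:
    """Frame one message and send it in full."""
    sock.sendall(STX + payload.encode("ascii") + ETX)


class TelegramReader:
    """Rebuilds framed telegrams from a TCP byte stream.

    One recv() may return half a telegram or several of them, so bytes are
    buffered until an ETX arrives."""

    def __init__(self, sock: socket.socket) -> None:
        self.sock = sock
        self.buffer = b""

    def read(self) -> str:
        while True:
            end = self.buffer.find(ETX)
            if end != -1:
                frame, self.buffer = self.buffer[:end], self.buffer[end + 1:]
                start = frame.rfind(STX)  # ignore any bytes before the last STX
                if start == -1:
                    raise ValueError("telegram without STX")
                return frame[start + 1:].decode("ascii")
            chunk = self.sock.recv(4096)
            if not chunk:
                raise ConnectionError("peer closed the connection")
            self.buffer += chunk


# Demo over a local socket pair standing in for the ERP <-> warehouse link.
erp_side, wms_side = socket.socketpair()
send_telegram(erp_side, "PICK;ORDER1;LINE1;SKU123;2")  # hypothetical picking line
reader = TelegramReader(wms_side)
print(reader.read())  # PICK;ORDER1;LINE1;SKU123;2
```

A production interface would also need acknowledgements and reconnection handling as defined by the warehouse protocol; the sketch only shows why framing is unavoidable on a raw socket.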

The minimum processes for the maximum result

For the middleware, I followed the rule “the minimum processes for the maximum result”. I don’t want to replicate ERP logic in the middleware, and I want to guarantee the centrality of the ERP. I chose the Microsoft platform to develop several middlewares, split by area, to minimize the points of failure.

Each middleware has its own database with the minimum data required for its processes. The data are continuously replicated to and from the ERP. I used the Business Central APIs with JSON messages. If the ERP system is in maintenance, the middleware has the data to continue the operations.
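A minimal Python sketch of that idea, assuming SQLite as the middleware database (the post does not say which database is used) and a hypothetical `erp_send` function for the API calls. Availability checks are answered from the local replica, orders are written to an outbox in the same transaction as the stock reservation, and the outbox is flushed when the ERP is reachable again. Decrementing stock locally is an assumption here, meant to avoid promising the same units twice while the ERP is offline; the ERP stays the authority and overwrites the figures at the next replication.

```python
import json
import sqlite3
from typing import Callable


class MiddlewareStore:
    """Minimal local data for one middleware: an availability table replicated
    from the ERP and an outbox of messages waiting to be sent back to it."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        with self.db:
            self.db.execute("CREATE TABLE IF NOT EXISTS availability "
                            "(item TEXT PRIMARY KEY, qty INTEGER NOT NULL)")
            self.db.execute("CREATE TABLE IF NOT EXISTS outbox "
                            "(id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)")

    def replicate_from_erp(self, rows: dict) -> None:
        """Apply an availability snapshot received from the ERP."""
        with self.db:
            self.db.executemany(
                "INSERT OR REPLACE INTO availability (item, qty) VALUES (?, ?)",
                rows.items())

    def check_availability(self, item: str) -> int:
        """Answered locally, so it works during ERP maintenance too."""
        row = self.db.execute(
            "SELECT qty FROM availability WHERE item = ?", (item,)).fetchone()
        return row[0] if row else 0

    def place_order(self, item: str, qty: int) -> bool:
        """Reserve stock locally and queue the order for the ERP in one transaction."""
        with self.db:
            cur = self.db.execute(
                "UPDATE availability SET qty = qty - ? WHERE item = ? AND qty >= ?",
                (qty, item, qty))
            if cur.rowcount == 0:
                return False  # unknown item or not enough stock
            self.db.execute("INSERT INTO outbox (body) VALUES (?)",
                            (json.dumps({"item": item, "qty": qty}),))
        return True

    def flush_outbox(self, erp_send: Callable[[str], None]) -> int:
        """Send queued messages in order; stop at the first failure and retry later."""
        sent = 0
        pending = self.db.execute("SELECT id, body FROM outbox ORDER BY id").fetchall()
        for msg_id, body in pending:
            try:
                erp_send(body)  # hypothetical call to the ERP API
            except ConnectionError:
                break  # ERP unavailable (e.g. maintenance): keep the rest queued
            with self.db:
                self.db.execute("DELETE FROM outbox WHERE id = ?", (msg_id,))
            sent += 1
        return sent


store = MiddlewareStore()
store.replicate_from_erp({"SKU123": 10})
store.place_order("SKU123", 4)
print(store.check_availability("SKU123"))  # 6
```

Because each message leaves the outbox only after `erp_send` succeeds, a maintenance window simply delays delivery instead of losing orders.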

The middlewares have a lightweight web interface (for diagnostics only) and share the same authentication.

Operations are orchestrated by Business Central but are executed asynchronously by the middlewares.

The result: we are not worried about the performance or unavailability of the ERP system.

Conclusions

The same as above 😅: in this era, it is not sufficient to change programming languages or software versions. It is mandatory to change the approach!